Humans possess an exceptional ability to visualize 4D scenes, encompassing both motion and 3D geometry, from a single still image. This ability is rooted in our accumulated observations of similar scenes and an intuitive understanding of physics. In this paper, we aim to replicate this capacity in neural networks, specifically focusing on natural fluid imagery. Existing methods for this task typically employ simplistic 2D motion estimators to animate the image, leading to motion predictions that often defy physical principles, resulting in unrealistic animations. Our approach introduces a novel method for generating 4D scenes with physics-consistent animation from a single image. We propose the use of a physics-informed neural network that predicts motion for each point, guided by a loss term derived from fundamental physical principles, including the Navier-Stokes equations. To reconstruct the 3D geometry, we predict feature-based 3D Gaussians from the input image, which are then animated using the predicted motions and rendered from any desired camera perspective. Experimental results highlight the effectiveness of our method in producing physically plausible animations, showcasing significant performance improvements over existing methods.

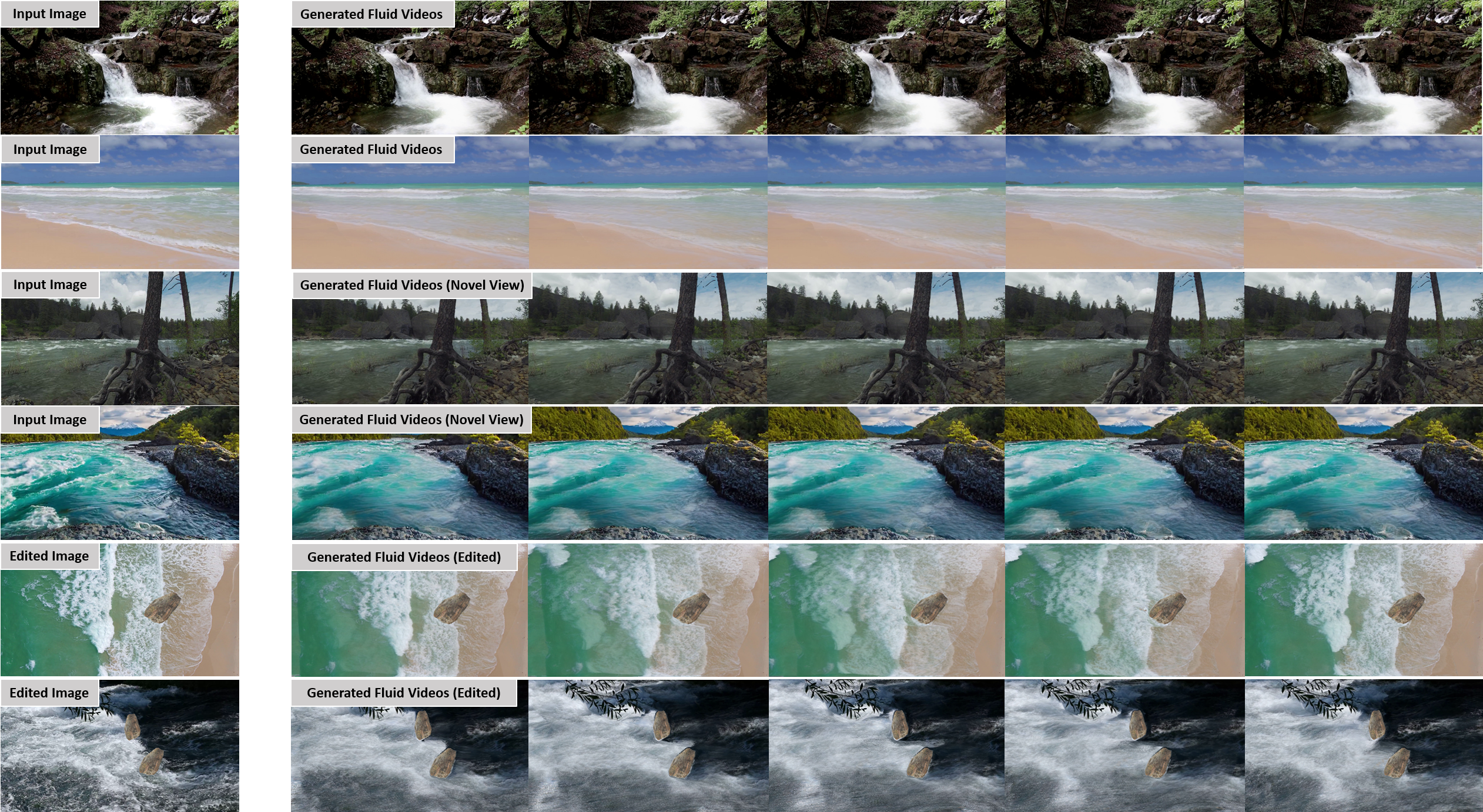

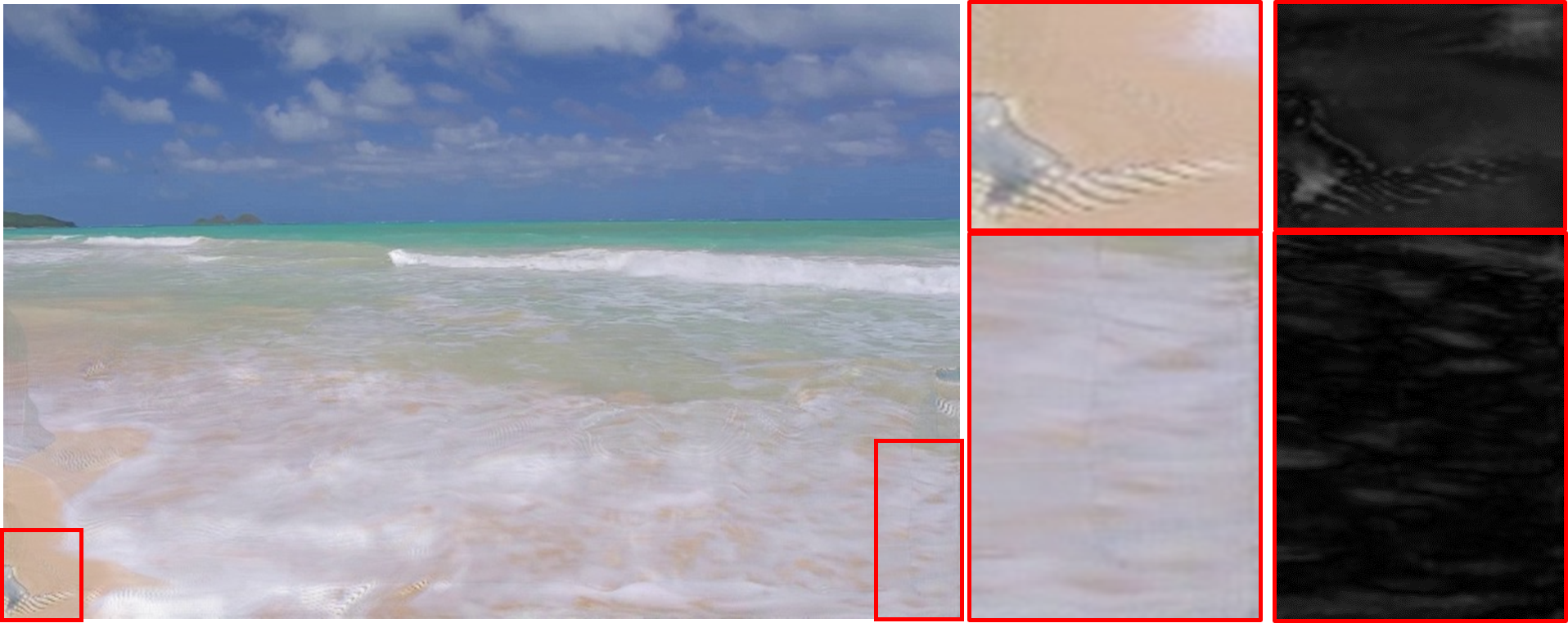

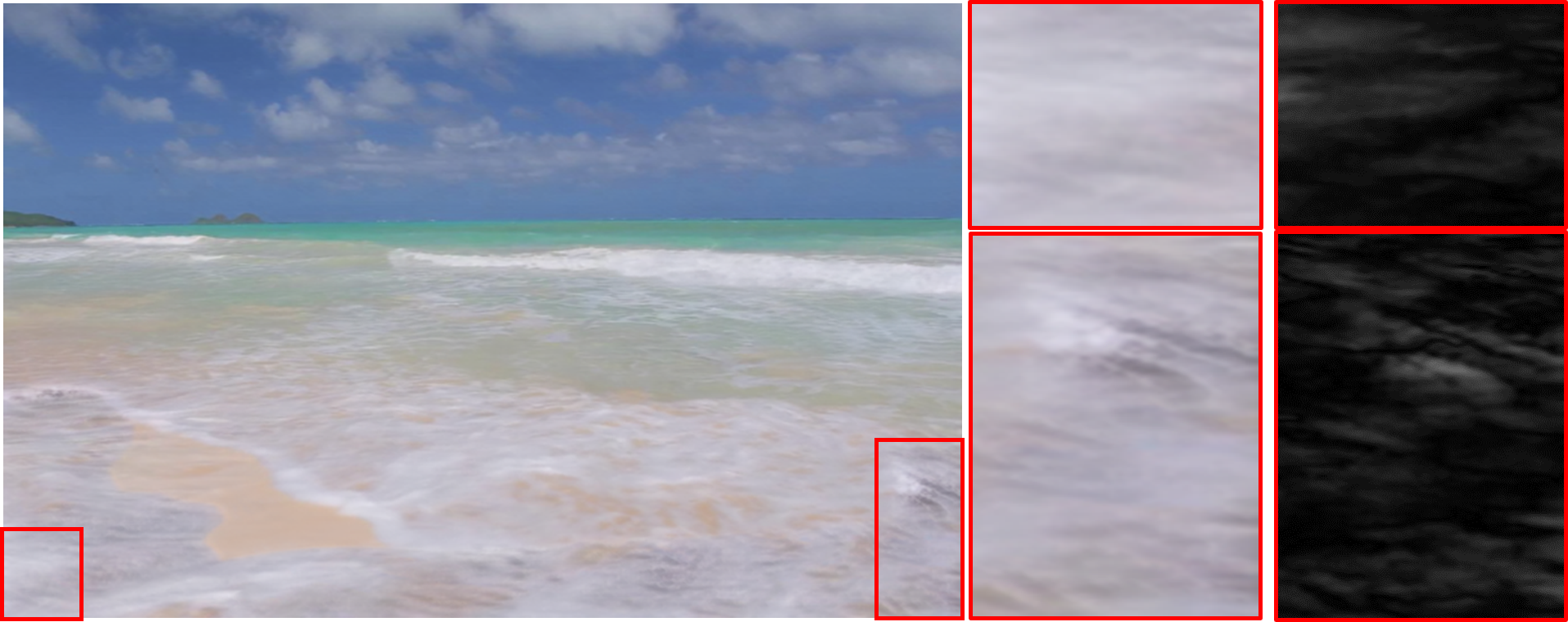

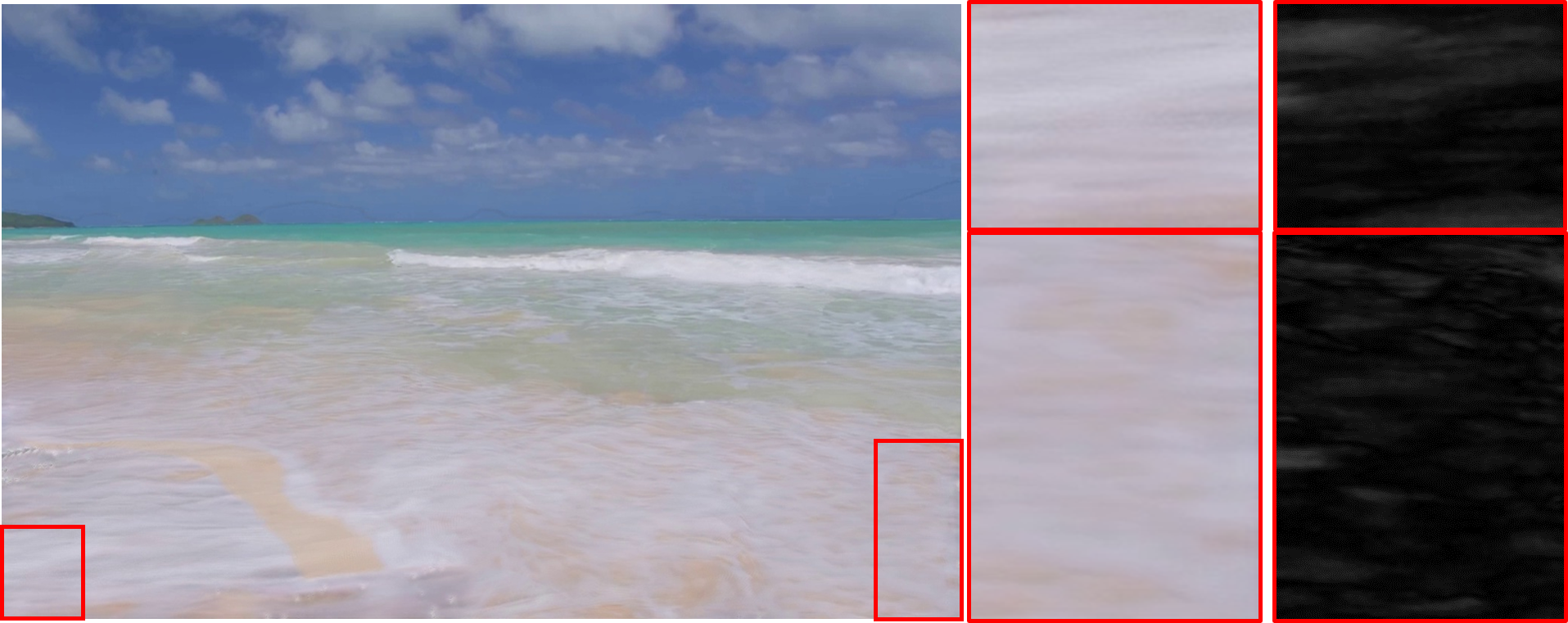

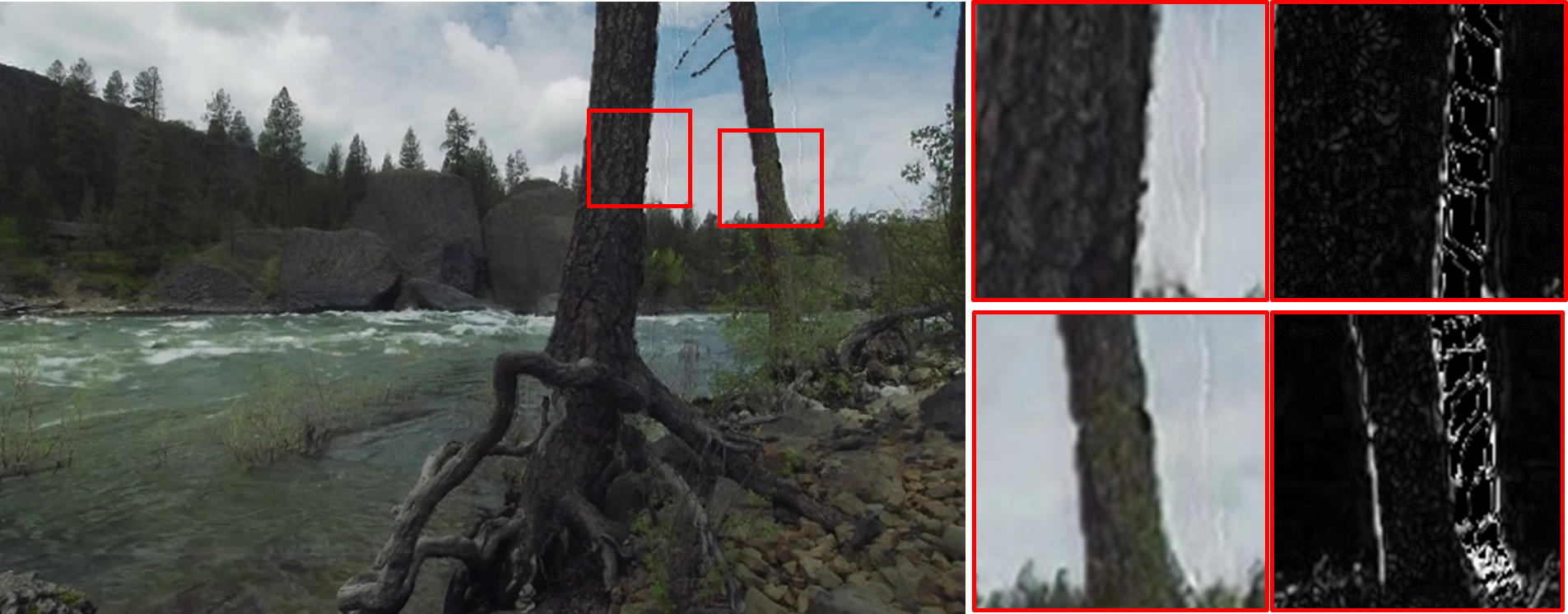

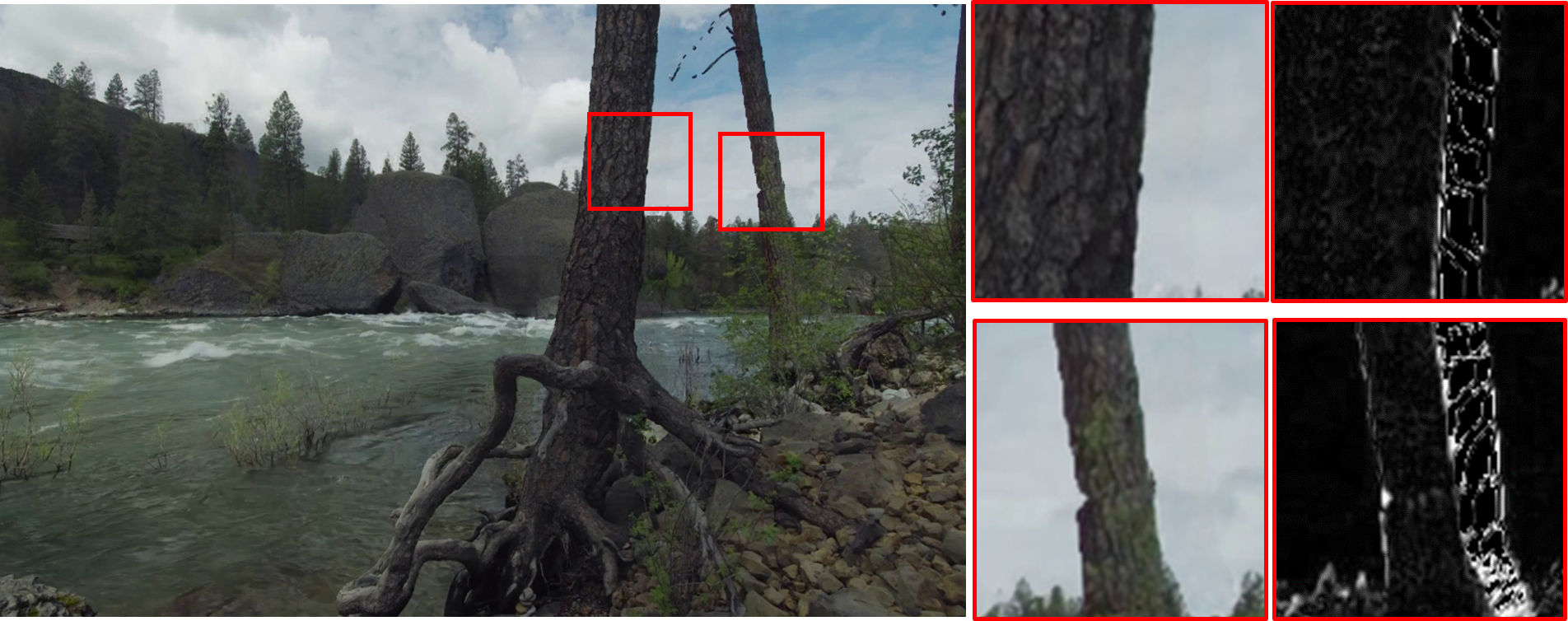

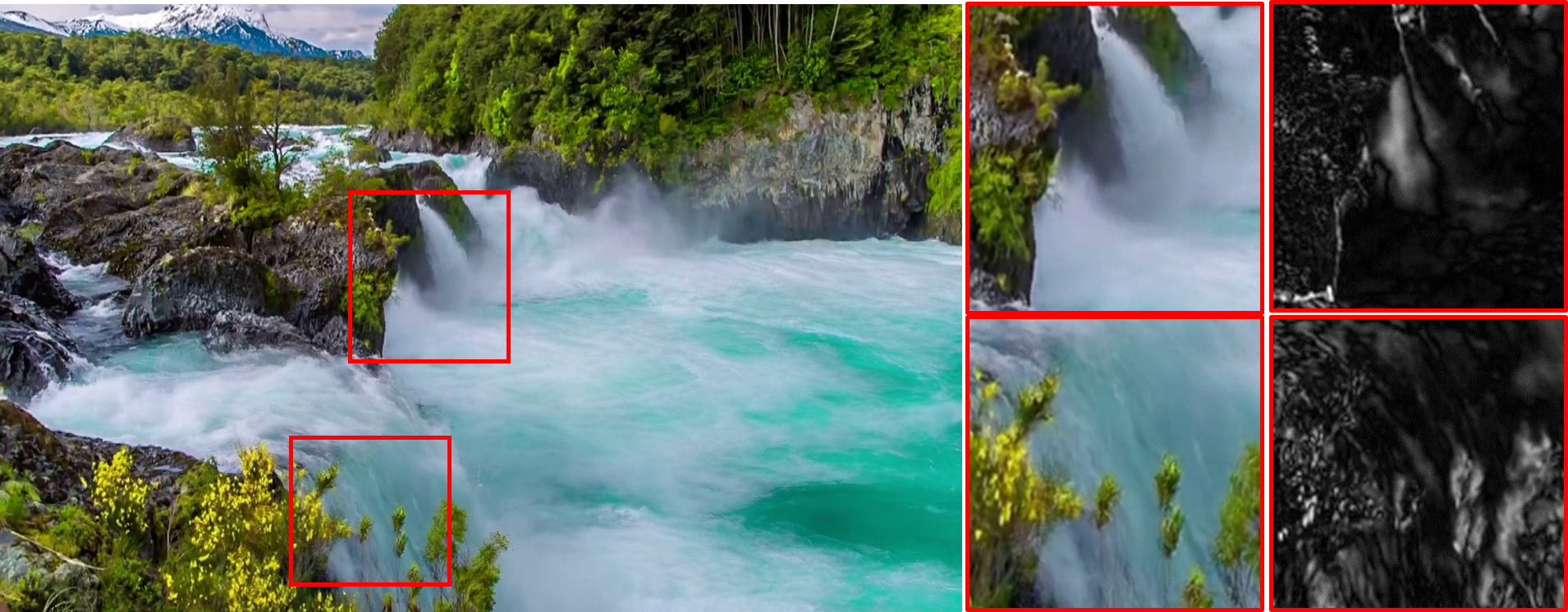

Fig 2: Qaulitative results on Holynski et al. validation set from the input view.. Our method produces compelling results, while others exhibit artifacts such as holes, dots, and mosaic patterns. Zoomed-ins and deviation maps are also provided for better visualizations.

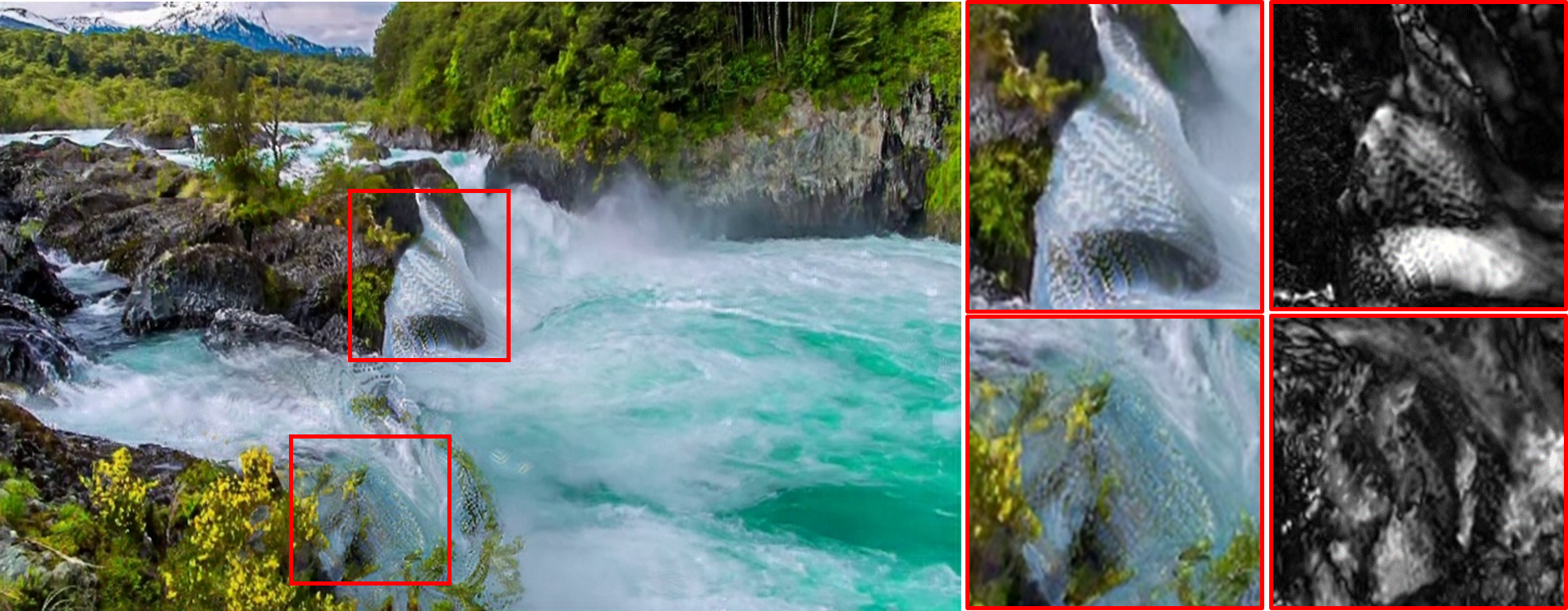

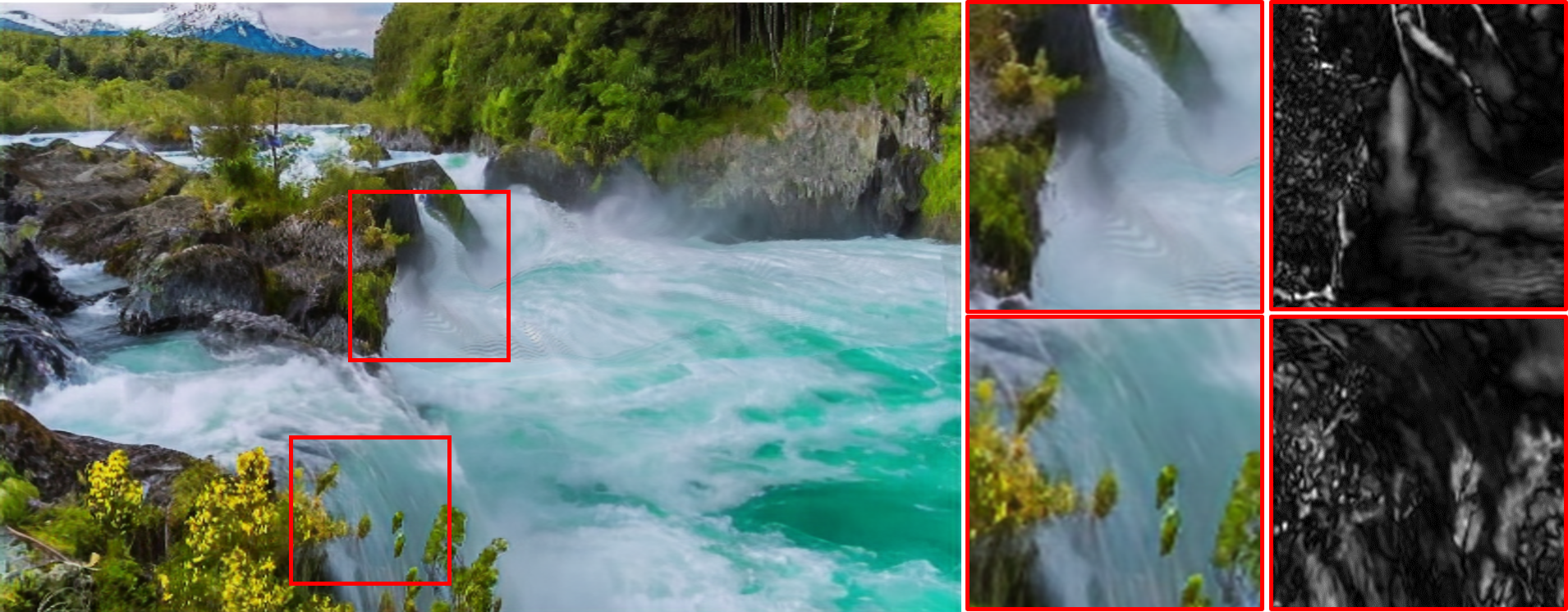

Fig 3: Qaulitative results on Holynski et al. validation set from novel views. Our method produces compelling results, whereas other methods exhibit the same artifacts present in videos from the input view, along with new artifacts in occluded areas.

| Method | PSNR ↑ | SSIM ↑ | LPIPS ↓ |

|---|---|---|---|

| Holynski et al. | 22.11 | 0.64 | 0.34 |

| 3D-Cinemagraphy | 22.81 | 0.70 | 0.22 |

| Ours | 24.98 | 0.78 | 0.20 |

Table 1: Quantitative results of generating videos from the input view on Holynski et al. validation set.

| Method | PSNR ↑ | SSIM ↑ | LPIPS ↓ |

|---|---|---|---|

| 2D Anim→NVS* | 21.12 | 0.63 | 0.29 |

| NVS→2D Anim* | 21.97 | 0.70 | 0.28 |

| 3D-Cinemagraphy | 22.46 | 0.67 | 0.24 |

| Make-it-4D | 21.40 | 0.55 | 0.31 |

| Ours | 24.34 | 0.76 | 0.21 |

Table 2: Quantitative results of generating videos from novel views on Holynski validation set. The results in lines marked with '*' are retrieved from 3D-Cinemagraphy.

| Fluid Regions | Other Regions | |||||

|---|---|---|---|---|---|---|

| Method | PSNR ↑ | SSIM ↑ | LPIPS ↓ | PSNR ↑ | SSIM ↑ | LPIPS ↓ |

| Holynski et al. | 26.10 | 0.82 | 0.18 | 24.50 | 0.72 | 0.32 |

| 3D-Cinemagraphy | 26.30 | 0.82 | 0.15 | 24.25 | 0.75 | 0.27 |

| Ours | 27.50 | 0.86 | 0.13 | 25.92 | 0.81 | 0.12 |

Table 3: Quantitative results for fluid and non-fluid regions, respectively.